Trailblazing AI: How Our Book and Researchers Are Making Global Waves

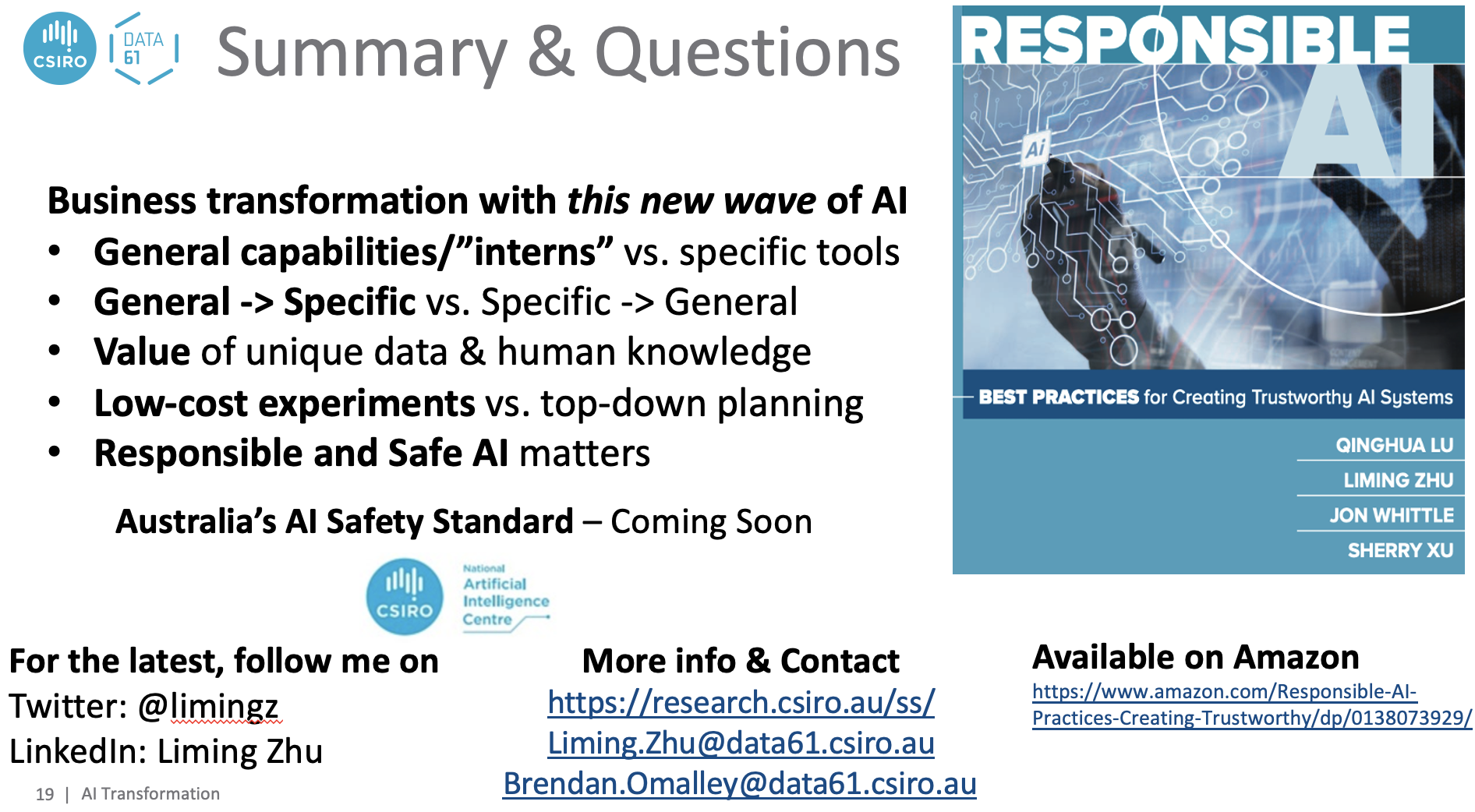

You know you’re too into your own book when you’re checking rankings like it’s your daily horoscope. Well, the stars aligned, and we’re effectively #3 for AI books globally! Shout-out to everyone who has supported our work! Let’s keep #ResponsibleAI and AI Safety at the heart of our efforts; they deserve our utmost attention! Order here:…