In my new opinion piece for CEDA (Committee for Economic Development of Australia), https://lnkd.in/gjbK3Uej, I tackle three pervasive challenges I’ve observed:

1. The tendency to view responsible AI as nothing more than a buzzword or truism. Is AI really special, or can we simply graft AI considerations onto existing governance frameworks?

2. The communication gap between executives, board members, and high-level risk management professionals on one side, and AI engineers and experts on the other. Often, these groups talk past each other, diluting the efficacy of governance measures.

3. The denial of inherent trade-offs in implementing best practices for responsible AI. Many believe that simply following best practices solves all issues, neglecting the complexities and trade-offs that often require broader community involvement.

We argue that AI poses unique ethical and governance challenges and that high-level principles must be deeply integrated with AI engineering practices. Moreover, trade-off decisions are not purely internal management matters; they should involve the community and external stakeholders.

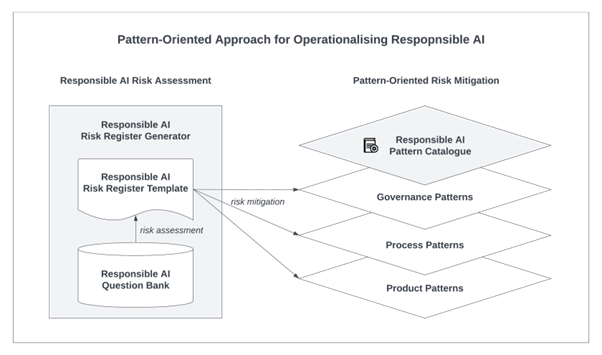

I point to the work of CSIRO’s Data61 on pattern-oriented approaches, which offer reusable best practices that account for nuanced contexts, pros and cons, and interconnections across the governance, process, and product dimensions.

https://lnkd.in/gkxVSKTz

The article is adapted from Chapter 3 of our upcoming book:

Qinghua Lu, Liming Zhu, Jon Whittle, and Xiwei (Sherry) Xu (2024). Responsible AI: Best Practices for Creating Trustworthy AI Systems. Addison-Wesley. (pre-order from late 2023)

For more on our broader work, see: https://lnkd.in/gyzjE4-i