🤔 How do you know if AI makes things better when you don’t even know how good the current human process is? And when AI explains itself, how do you know if you can trust the explanation?

At the NZ Government Data Summit, I shared three important, often overlooked insights from real-world AI deployments:

– AI alone often outperforms AI-human collaboration, so adding a human reviewer does not automatically make a system safer. Where and how human oversight is introduced can either reduce or increase overall risk.

– Evaluating AI also reveals human and process errors—which can generate resistance, especially when current processes were never rigorously assessed.

– Getting AI to explain its recommendations or conclusions isn’t enough. What matters is whether those explanations meet recognised expert standards and make sense to human reviewers—not whether they reflect how the AI works internally.

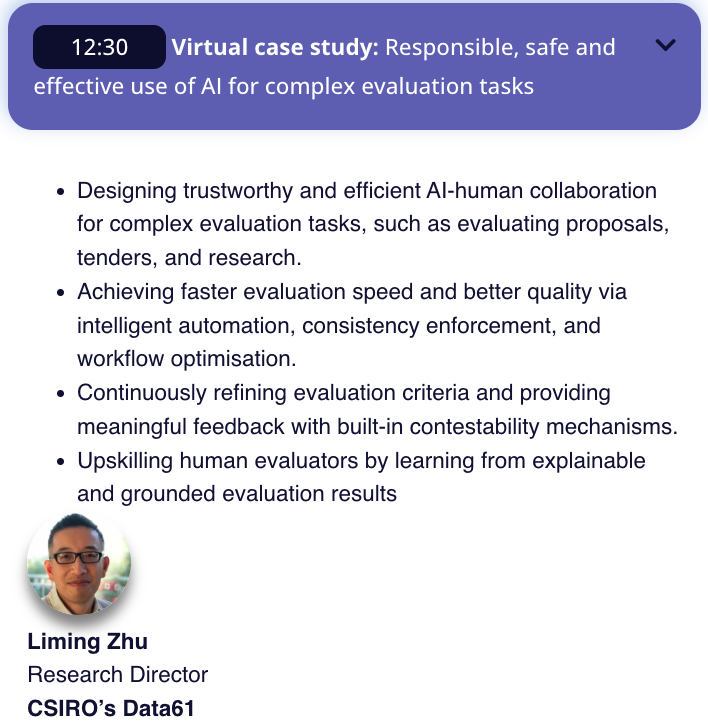

I demonstrated these with two case studies:

– AI Evaluator: How to assess whether AI lowers or raises risk, even when the absolute risk of the current human process is unknown (using marginal risk evaluation techniques).

– AI Peer Reviewer: How to ensure AI explanations are meaningful to humans, focusing not on whether they mirror the AI’s internal logic but on whether they align with expert judgement and procedural fairness (what we call external reasoning faithfulness).
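
For readers who want a feel for the marginal risk idea, here is a minimal sketch. It is an illustration under stated assumptions, not necessarily the method used in the case study: it assumes binary decisions, that the AI and the human process both decide the same cases, and that experts adjudicate only the cases where the two disagree. The names `Case` and `marginal_risk` are hypothetical. When both give the same answer they are either both right or both wrong, so those cases cancel out of the difference in error rates, and the absolute error rate of the human process never needs to be known.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Case:
    human: bool                   # decision from the current human process
    ai: bool                      # decision from the AI on the same case
    truth: Optional[bool] = None  # expert adjudication, needed only on disagreement


def marginal_risk(cases: list[Case]) -> float:
    """Return (AI error rate - human error rate) across all cases.

    Negative means the AI lowers risk relative to the human process;
    positive means it raises risk. Assumes binary decisions (illustrative).
    """
    if not cases:
        raise ValueError("need at least one case")
    ai_only_wrong = 0
    human_only_wrong = 0
    for c in cases:
        if c.human == c.ai:
            continue  # agreement: same correctness for both, no contribution
        if c.truth is None:
            raise ValueError("every disagreement must be adjudicated")
        if c.ai != c.truth:
            ai_only_wrong += 1
        else:
            human_only_wrong += 1
    return (ai_only_wrong - human_only_wrong) / len(cases)


# Made-up example data: 6 agreements, 3 disagreements where the human was
# wrong, 1 disagreement where the AI was wrong.
example = (
    [Case(human=True, ai=True) for _ in range(6)]
    + [Case(human=False, ai=True, truth=True) for _ in range(3)]
    + [Case(human=True, ai=False, truth=True)]
)
print(marginal_risk(example))  # -0.2
```

In this made-up example the AI's error rate is 20 percentage points lower than the human process's, even though neither absolute error rate was measured. A real evaluation would also need a confidence interval and a check that the adjudicators themselves are reliable.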

Selected slides: https://www.dropbox.com/scl/fi/5hfxx1ae0kswj0dflaot7/20250508-AI-for-Evaluation-NZ-Gov.pdf?rlkey=wsufwwph4dl73zdkxaicx0suy&dl=0