As those who follow my posts will know, I have recently travelled the world to strengthen connections with responsible AI and AI safety research organisations, including all the new AI Safety Institutes (AISI) and many national organisations and programs with a critical mass in responsible/safe AI.

CSIRO’s Data61 has built active collaborations with organisations in the US, UK, Canada, Japan, Singapore, India, Germany, and France on this topic, with a strong focus on AI system trustworthiness engineering and assessment (beyond AI models and organisational governance/management aspects). Australia is effectively leading the formation of this community. 🌟

One way for this community to exchange ideas and collaborate is through the workshops we organise and the organising/program committee members we invite to set the directions. We deliberately host these workshops in different academic communities because we observe that these communities (AI/ML, Software/AI Engineering, Social Science/Law/Philosophy) do not talk to each other on this topic as much as we would hope. 💡

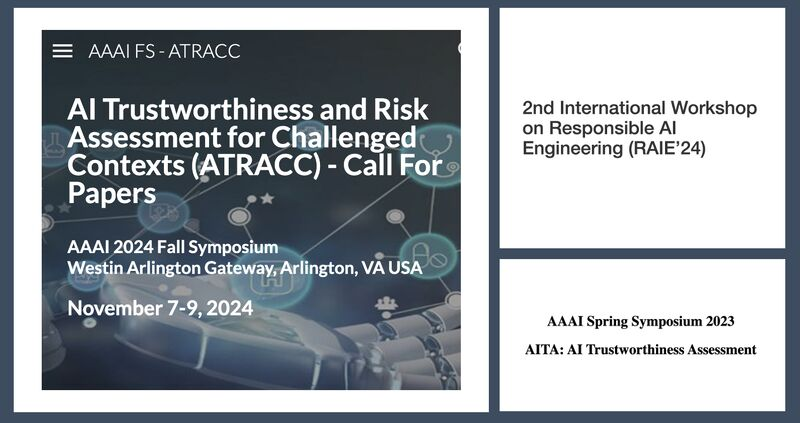

One ongoing series, co-organised by Qinghua Lu, is the 𝐑𝐞𝐬𝐩𝐨𝐧𝐬𝐢𝐛𝐥𝐞 𝐀𝐈 𝐄𝐧𝐠𝐢𝐧𝐞𝐞𝐫𝐢𝐧𝐠 (𝐑𝐀𝐈𝐄) workshop, hosted at the premier software/AI engineering conference, ICSE. We have just held our 2024 instalment https://lnkd.in/gWFfZwQi , so stay tuned for the 2025 details. 🔍

The other is the 𝐀𝐈 𝐓𝐫𝐮𝐬𝐭𝐰𝐨𝐫𝐭𝐡𝐢𝐧𝐞𝐬𝐬 𝐀𝐬𝐬𝐞𝐬𝐬𝐦𝐞𝐧𝐭 (𝐀𝐈𝐓𝐀) workshop, hosted at the 𝐀𝐀𝐀𝐈 𝐒𝐲𝐦𝐩𝐨𝐬𝐢𝐮𝐦𝐬. We held it last year and are running it again with an expanded community in November in Arlington, VA, USA. 𝐈𝐟 𝐲𝐨𝐮 𝐚𝐫𝐞 𝐢𝐧𝐭𝐞𝐫𝐞𝐬𝐭𝐞𝐝 𝐢𝐧 𝐣𝐨𝐢𝐧𝐢𝐧𝐠, 𝐩𝐥𝐞𝐚𝐬𝐞 𝐬𝐮𝐛𝐦𝐢𝐭 𝐩𝐚𝐩𝐞𝐫𝐬 𝐛𝐲 𝟐𝐧𝐝 𝐀𝐮𝐠𝐮𝐬𝐭 (𝐦𝐚𝐱𝐢𝐦𝐮𝐦 𝟖 𝐩𝐚𝐠𝐞𝐬 𝐟𝐨𝐫 𝐟𝐮𝐥𝐥 𝐩𝐚𝐩𝐞𝐫𝐬, 𝟒 𝐩𝐚𝐠𝐞𝐬 𝐟𝐨𝐫 𝐩𝐨𝐬𝐭𝐞𝐫/𝐬𝐡𝐨𝐫𝐭/𝐩𝐨𝐬𝐢𝐭𝐢𝐨𝐧 𝐩𝐚𝐩𝐞𝐫𝐬) https://lnkd.in/gVSkQgTf . 📄

More importantly, we hope that people from a more diverse range of countries and organisations will get involved, both in organising these workshops and in the wider community.

If you are part of a research organisation with a national program or a critical mass in responsible and safe AI, especially with a system-level focus, feel free to reach out to me.

About Me

Director/Head of CSIRO’s Data61

Conjoint Professor, CSE UNSW

For other roles, see LinkedIn & Professional activities.

If you’d like to invite me to give a talk, please see here & email liming.zhu@data61.csiro.au